Following the release of OpenAI’s ChatGPT, generative artificial intelligence has exploded in popularity, and many institutions have been integrating the technology into their products and processes. As explained by Nvidia, a world leader in artificial intelligence, large language models (LLMs) are a form of deep learning algorithm – a type of artificial neural network inspired by the human brain to identify patterns and make predictions – that can “recognize, summarize, translate, predict and generate text and other forms of content based on knowledge gained from massive datasets.” At their core, large language models are powered by a backbone of transformer models, neural networks that learn the complex relationships in sequential data such as human languages and programming languages. Large language models are trained on increasingly massive datasets to enrich them with more contextual information. OpenAI’s GPT-3, released in 2020 and the predecessor of the model that powered the initial 2022 release of ChatGPT, contains 175 billion parameters, the numeric weights and biases that inform how the model makes predictions. Transformers and extensive training data allow large language models to be exceptionally versatile in both professional and personal contexts.

As tens of millions of users have realized since the public release of ChatGPT in 2022, large language models are capable of completing a vast range of language processing tasks. Users have discovered that large language models such as ChatGPT can write songs, poems, stories, and essays. ChatGPT is also capable of recommending workout routines, giving relationship advice, and suggesting recipes. However, leading experts in artificial intelligence claim that large language models have even more potential. Nvidia claims that along with potentially revolutionizing search engines, tutoring services, and marketing campaigns, large language models could transform countless other industries.

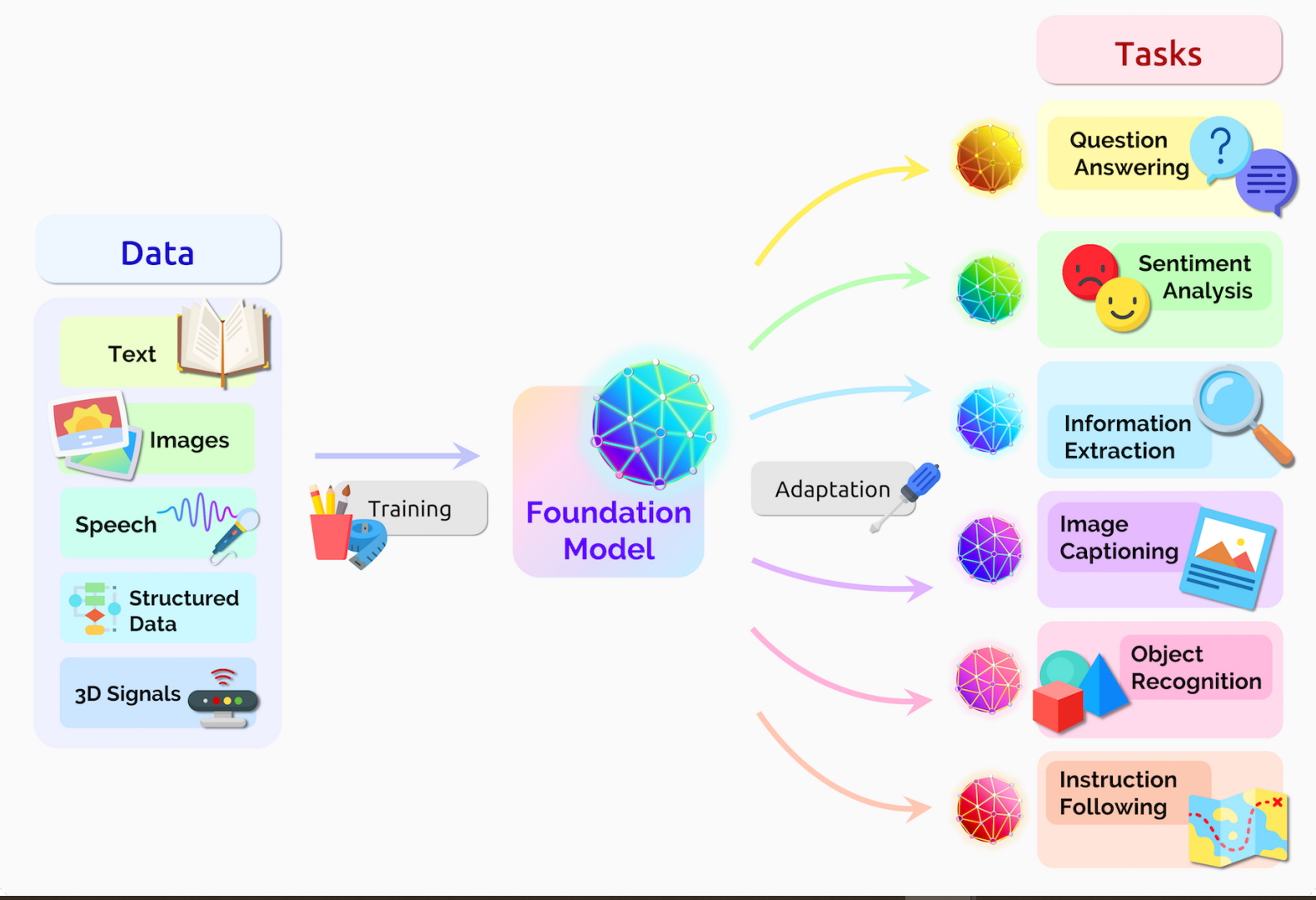

Beyond general use cases, transformer models also allow large language models to be customized for more focused use cases. However, large language models are immensely complex, so building and maintaining foundational models from scratch can be excessively challenging and extremely expensive. Acquiring the substantial quantities of training data required to build a foundational model necessitates expansive resources and may not be feasible for all developers. Even if the required training data is obtained, the training process can take many months and require hundreds of millions of dollars of AI infrastructure. For example, the thousands of Nvidia A100 and H100 compute GPUs that power ChatGPT retail for $10,000 and $30,000 each, respectively. MetaAI’s LLaMA large language model required over 3.3 million GPU hours of training. Rather than building an entirely new model from scratch, developers can fine-tune a pre-trained model to better suit their use case.

Fine-tuning requires less financial backing, time investment, and technical expertise, causing it to emerge as a highly scrutinized subject of global research. Experts in computer science, data science, and fields such as biology, health, and aviation envision a future driven by fine-tuned large language models. However, large language models remain volatile and often unpredictable, raising concerns about effectiveness and safety. Therefore, the question remains: are large language models a safe and effective replacement for existing systems?

Since the release of Google’s Bidirectional Encoder Representations from Transformers (BERT) family of LLMs in 2018, fine-tuning has emerged as a common benchmark in natural language processing experiments. BERT’s base model has 110 million parameters while its large model has 340 million, both less than 1% of the number of parameters in GPT-3 (3). In aviation, researchers in computer science and data science from the School of Aerospace Engineering at Georgia Institute of Technology examined the potential of ChatGPT and fine-tuned BERT models for aviation safety analysis amid rising numbers of reported incidents, publishing their findings. Aviation safety is critical, especially as air traffic expands, and incident reports must be comprehensive and carefully analyzed to ensure safety. However, current methods of generating and processing reports can require as many as five business days. To increase the efficiency of incident reporting and processing, the researchers propose the application of ChatGPT.

In the system developed by the researchers, ChatGPT analyzes incident narratives, identifies the human errors that contributed to incidents, and identifies the entities responsible. Using this information, ChatGPT generates a summary of the incident. The quality of these summaries was compared to preexisting fine-tuned BERT models and to human analysts.

Researchers concluded that ChatGPT is not a suitable replacement for human analysts, stating that in cases where incidents were attributed to human error, human analysts agreed with ChatGPT’s classification of the incident only 61% of the time (21). Instead, researchers propose that a further refined application of ChatGPT in aviation safety analysis may eventually serve as an assistant rather than a full replacement of existing systems.

Large language models have also been proposed as a solution to medical field issues. In 2022, researchers at Chungnam National University in South Korea analyzed the potential of BERT for predicting drug-target interactions (DTIs) and expediting the discovery of new drugs, publishing their findings. As explained in the article, the current drug discovery process is “expensive, labor-intensive, and inefficient” and could be expedited by separating promising drug candidates from less certain drug candidates. For this reason, biology researchers have proposed large language models to efficiently predict drug-target interactions, shortening the drug discovery process. Large language models such as BERT present a possible solution to predicting drug-target interactions.

In their fine-tuning of the BERT model, researchers used multiple datasets: BIOSNAP, DAVIS, and BindingDB. Each of these datasets contains different information relating to the DTI process, supplying the model with sufficient contextual information. Specifically, the datasets contain information on 15,243 drugs, 3,973 proteins, and 71,186 interactions. The fine-tuned LLM learns chemical information expressed in the simplified molecular-input line-entry system (SMILES) format. It uses two transformer-based encoders: one for encoding chemical compound information from SMILES strings using ChemBERTa and the other for capturing protein information from amino acid sequences using ProtBERT. The encoded information from the two transformer models is then processed to predict drug-target interaction.

To evaluate the proposed fine-tuned model’s performance, researchers used various performance benchmarks. Researchers compared the proposed model’s performance with that of another transformer-based DTI prediction model, MolTrans. The MolTrans dataset was used to ensure a fair comparison of the models. To test the effects of pretraining on the performance of the proposed model, the model was divided into four separate submodels, each using different datasets and pre-trained weights. The measured performance of these models was then compared. The metrics used to compare the models were receiver operating characteristic area under the curve (ROC-AUC), precision-recall area under the curve (PR-AUC), and sensitivity. Essentially, these metrics measure how reliably the model distinguishes positive cases from negative ones. The researchers found that the proposed model performed admirably in DTI prediction and could identify likely drug candidates for prioritization in drug-target interaction experiments. Despite its good performance, the model still produces false positives. Therefore, the model is not a suitable replacement for the human validation of drug safety and effectiveness and can only serve as an accelerator of the drug discovery process.
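The three metrics named above can be illustrated with a minimal, standard-library sketch. This is not the researchers' evaluation code: the function names and the toy labels and scores are assumptions made for illustration, and PR-AUC is approximated here by average precision, one common step-wise estimate of the area under the precision-recall curve.

```python
from typing import List


def sensitivity(labels: List[int], predictions: List[int]) -> float:
    """True positive rate: the share of real interactions the model flags."""
    true_pos = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 1)
    false_neg = sum(1 for y, p in zip(labels, predictions) if y == 1 and p == 0)
    return true_pos / (true_pos + false_neg)


def roc_auc(labels: List[int], scores: List[float]) -> float:
    """Probability that a random positive outscores a random negative.

    Ties count as half a win. This pairwise form equals the area under
    the ROC curve.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: List[int], scores: List[float]) -> float:
    """Step-wise estimate of PR-AUC: mean precision at each true positive."""
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    hits = 0
    total = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank
    return total / sum(labels)


# Hypothetical toy data: 1 = the drug-target pair interacts, 0 = it does not.
toy_labels = [1, 0, 1, 1, 0, 0]
toy_scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
toy_predictions = [1 if s >= 0.5 else 0 for s in toy_scores]  # assumed 0.5 cutoff

print("sensitivity:", sensitivity(toy_labels, toy_predictions))
print("ROC-AUC:", roc_auc(toy_labels, toy_scores))
print("PR-AUC (average precision):", average_precision(toy_labels, toy_scores))
```

In the toy data, the negative pair scored 0.8 is a false positive at the assumed 0.5 cutoff, the kind of error that keeps human validation in the loop.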

The potential of fine-tuned large language models is also being analyzed in the prediction of protein-DNA binding sites, a topic examined by experts in computer science, data science, health, and biology from various institutes in China and Japan. Understanding protein-DNA binding sites is a key issue in genome biology research and is essential to drug development. By predicting protein-DNA binding sites, biologists develop a better understanding of protein-DNA binding.

Traditional biological sequencing techniques used in predicting protein-DNA binding sites are becoming obsolete with the advent of advanced sequencing technologies. “In the past decades,” the researchers noted, “sequencing operations were performed using traditional biological methods, especially ChIP-seq [12] sequencing technology, which greatly increased the quantity and quality of available sequences and laid the foundation for subsequent studies. With the development of sequencing technology, the number of genomic sequences has increased dramatically” (664). Traditional techniques are expensive and inefficient, struggling to process the growing availability of genomic sequences, and the machine learning and deep learning models meant to replace them have not proved sufficient. For this reason, researchers suggest that large language models are the most tenable solution, offering increased accuracy and processing efficiency.

In the article, researchers compare DNABERT, a model also based on Google’s BERT LLM and fine-tuned for predicting protein-DNA binding sites, with the DeepBind, DanQ, and WSCNNLSTM deep learning models. Unlike traditional machine learning models or other types of deep learning models, DNABERT segments DNA sequences and adds location information, giving DNABERT additional contextual information that machine learning models lack. DNABERT’s protein-DNA binding site prediction performance was confirmed to exceed that of existing machine learning and deep learning models. Despite the advancements observed with DNABERT, researchers noted that there is still work to be done to further improve the model’s accuracy.
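One way to picture segmenting a DNA sequence while keeping location information is to split it into overlapping fixed-length substrings (k-mers), each paired with its start position. The sketch below assumes that scheme purely for illustration; the function name `kmer_tokens` and the choice of `k` are hypothetical, and the source does not describe DNABERT's actual tokenizer or how it encodes position.

```python
from typing import List, Tuple


def kmer_tokens(sequence: str, k: int = 3) -> List[Tuple[int, str]]:
    """Split a DNA sequence into overlapping k-mers tagged with their start index.

    Illustrative only: a real model would map each k-mer to a vocabulary id
    and add a learned position embedding rather than carry a raw index.
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    seq = sequence.upper()
    # A sequence shorter than k yields no tokens.
    return [(start, seq[start:start + k]) for start in range(len(seq) - k + 1)]


# Example: each token overlaps its neighbor by k - 1 bases.
for position, token in kmer_tokens("ATGCGT", k=3):
    print(position, token)
```

Because neighboring tokens overlap, each base appears in several tokens, which gives a model local context around every position.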

In the five years since BERT’s release, large language models have advanced and have been trained to draw context from drastically larger training datasets. A team of researchers composed of computer science and health experts from universities in the United States and China explored the viability of the Large Language Model Meta-AI (LLaMA) LLM for providing medical advice and diagnostics. Combining the fields of computer science and health science, the ChatDoctor experiment aimed to address the limitations of large language models in providing accurate medical advice. Their initial analysis of the medical potential of large language models identified two primary limitations: training data and access to up-to-date information. “In general,” the researchers noted, “these common-domain models were not trained to capture the medical-domain knowledge specifically or in detail, resulting in models that often provide incorrect medical responses.” General-domain large language models draw their adaptability from diverse, nonspecific training data, rather than focused data relating to specific topics. Even the most prominent LLMs, ChatGPT and LLaMA, do not have active internet access. Additionally, a conventional LLM functions by predicting the next word in a sentence. This approach is effective for general use cases but can result in inaccuracies. To address these limitations, the researchers curated two datasets, one targeting the casual language of patients and the other targeting the professional language of doctors.

In a typical medical visit, patients use casual language to describe their symptoms and concerns. Linking the casual language used by patients to professional terminology is vital to improving accuracy. Gathering about 110,000 patient-doctor conversations from the HealthCareMagic and iCliniq online medical consultation websites, researchers manually and automatically filtered the dataset. Overly long conversations and short conversations were excluded. Personal information, including patient and doctor identities, was obscured.
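The automatic part of that filtering can be sketched as a length check followed by a masking step. Everything specific below is an assumption made for illustration: the word-count thresholds, the `Conversation` type, and the title-based name pattern are hypothetical, since the source does not state the researchers' actual cutoffs or anonymization rules.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Conversation:
    patient: str
    doctor: str


# Hypothetical thresholds; the source does not give the real cutoffs.
MIN_WORDS = 5
MAX_WORDS = 300

# Hypothetical pattern: a title followed by a capitalized surname.
NAME_PATTERN = re.compile(r"\b(Dr\.?|Mr\.?|Mrs\.?|Ms\.?)\s+[A-Z][a-z]+")


def word_count(conv: Conversation) -> int:
    return len(conv.patient.split()) + len(conv.doctor.split())


def within_length_limits(conv: Conversation) -> bool:
    """Keep only conversations that are neither too short nor too long."""
    return MIN_WORDS <= word_count(conv) <= MAX_WORDS


def anonymize(text: str) -> str:
    """Replace titled names with a placeholder token."""
    return NAME_PATTERN.sub("[NAME]", text)


def clean(conversations: List[Conversation]) -> List[Conversation]:
    """Drop out-of-range conversations, then mask names in the rest."""
    return [
        Conversation(anonymize(c.patient), anonymize(c.doctor))
        for c in conversations
        if within_length_limits(c)
    ]
```

A pattern this simple would miss many real identifiers, which is consistent with the researchers pairing automatic filtering with manual review.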

The second dataset contained diseases, symptoms, relevant medical tests and/or treatment procedures, and possible medications. This information was gathered from MedlinePlus and can be continually updated without requiring model re-training, which allows ChatDoctor to be further customized for specific ailments and medical subjects. In addition to curating offline datasets, the researchers also gave ChatDoctor active internet access to databases such as Wikipedia. Rather than building on ChatGPT, ChatDoctor is based on Meta’s open-source LLaMA LLM. The LLaMA-7B model that ChatDoctor is based on has 7 billion parameters compared to ChatGPT’s 175 billion while exhibiting comparable performance.

To test the performance of ChatDoctor, researchers prompted the model with independently sourced questions from iCliniq. Actual responses from human doctors served as a control to compare with the ChatDoctor responses and ChatGPT responses. Utilizing a system called BERTScore, researchers compared the closeness of generated responses to human responses. The results showed that ChatDoctor is more accurate than ChatGPT and more capable of generating coherent medical responses using the correct vocabulary. However, both models experience the common limitations of large language models and generative artificial intelligence as a whole. Timothy B. Lee and Sean Trott of Ars Technica explain: “Conventional software is created by human programmers, who give computers explicit, step-by-step instructions. By contrast, ChatGPT is built on a neural network that was trained using billions of words of ordinary language.” The outputs of large language models are calculated with billions of variables, resulting in a system so complex that no person alive today fully understands how it works. Consequently, the researchers behind ChatDoctor state that “actual clinical use is subject to the risk of wrong answers being output by the model, and the use of exclusively LLMs in medical diagnosis is still plagued by false positives and false negatives for the time being.” Therefore, the use of ChatDoctor would be limited to assistance with in-person medical consultations rather than serving as a complete replacement for existing methods of consultation.
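The idea behind an embedding-based similarity score such as BERTScore can be sketched as greedy matching: each token in one response is paired with its most similar token in the other, and the best-match similarities are averaged into precision, recall, and F1. The code below is a simplified illustration under stated assumptions, not the BERTScore library or the researchers' setup: it takes token vectors as plain lists, whereas the real metric derives contextual embeddings from a BERT model, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def greedy_match_score(
    candidate: List[Vector], reference: List[Vector]
) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from best-match cosine similarities.

    Precision averages each candidate token's best match in the reference;
    recall averages each reference token's best match in the candidate.
    """
    precision = sum(
        max(cosine(c, r) for r in reference) for c in candidate
    ) / len(candidate)
    recall = sum(
        max(cosine(r, c) for c in candidate) for r in reference
    ) / len(reference)
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical 2-dimensional "embeddings" standing in for real token vectors.
generated = [[1.0, 0.0]]
human = [[1.0, 0.0], [0.0, 1.0]]
print(greedy_match_score(generated, human))
```

In this toy case the generated response matches part of the human response perfectly but covers only half of it, so precision is high while recall is lower.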

Large language models exhibit potential in several fields, including aviation safety analysis, drug discovery, and medical consultation. In the not-too-distant future, large language models could improve the efficiency and safety of air travel, reduce the time required to identify and test new drugs, or even redefine how patients receive medical advice and diagnoses. Pre-training new large language models for specific roles requires vast financial backing, time dedication, and expertise. Fine-tuning pre-trained models provides a more accessible approach, reducing the financial, time, and expert requirements. However, the immense complexity of large language models presents challenges in understanding and controlling their outputs. As experimental implementations of large language models in drug discovery, safety report analysis, and medical consultation have shown, large language models trade accuracy for adaptability. Although LLMs are capable of increasing the efficiency of existing processes, their inaccuracy rate remains non-zero and necessitates human validation. While large language models are adaptable and can perform many tasks including identifying likely drug candidates, summarizing aviation safety reports, and providing medical advice, they are equally capable of generating potentially dangerous results. Therefore, large language models may serve as valuable tools in a wide range of implementations, but their integration must be approached with caution and in most cases cannot fully replace existing systems.
